Introduction

The last piece of my three-part series on migrating from AWS to GCP focuses on the database challenges of a no-downtime migration (you can view part 2 here). This piece is the most time-consuming one: the larger the database, the longer it takes to move and sync. This post also goes over the strategies and options for a no-downtime migration and switchover.

MongoDB Migration

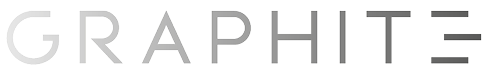

The challenge when moving a large database, especially a sharded one, is its sheer size as well as the distribution of the data. In our case we had a MongoDB database that was sharded by key. A typical MongoDB replica set is architected as follows.

Basic Configuration Primary / Secondary

The primary acts as a read/write node, while the secondaries act as read-only nodes. You can add more secondaries (MongoDB caps a replica set at 50 members, of which 7 can vote), although performance takes a hit the more you add, and elections for a new primary stall for longer. A simple MongoDB optimization is to disable reads on the primary, so that the primary handles the writes and the secondaries serve the reads.
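One way to keep reads off the primary is from the client side, through the driver's read preference. The sketch below builds a connection string using the standard `replicaSet` and `readPreference` URI options. The host names, the `rs` set name, and the `buildMongoUri` helper are placeholders for illustration, not taken from our setup.

```javascript
// Hypothetical helper: builds a MongoDB connection string that targets a
// replica set and asks the driver to route reads to the secondaries.
function buildMongoUri(hosts, replicaSet, readPreference = "secondary") {
  const allowed = [
    "primary",
    "primaryPreferred",
    "secondary",
    "secondaryPreferred",
    "nearest",
  ];
  if (!allowed.includes(readPreference)) {
    throw new Error(`unknown read preference: ${readPreference}`);
  }
  // URLSearchParams takes care of encoding the option values.
  const params = new URLSearchParams({ replicaSet, readPreference });
  return `mongodb://${hosts.join(",")}/?${params.toString()}`;
}

// Placeholder hosts; substitute the members of your own replica set.
const uri = buildMongoUri(
  ["db1.example.com:27017", "db2.example.com:27017", "db3.example.com:27017"],
  "rs"
);
console.log(uri);
```

With `secondary`, reads fail when no secondary is available. `secondaryPreferred` falls back to the primary instead, which may be the safer choice during a migration.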

The Challenges with moving Data from Cloud Providers

- The main challenge is maintaining data integrity while swapping the app from AWS→GCP

- Dump and Restore: Dump the database to a file in AWS, transfer the file to GCP, and recreate the database in GCP. This is possible, but it takes about 3+ weeks on a 5 TB database (it scales linearly with size). The cost you pay is time, and afterwards you still have to deal with the delta of data that was inserted during the dump and restore. A plain dump and restore does not take a point-in-time backup, so the data will not be consistent.

- Rebuilding of Indexes: Rebuilding the indexes on large datasets takes a long time and causes noticeable performance degradation.

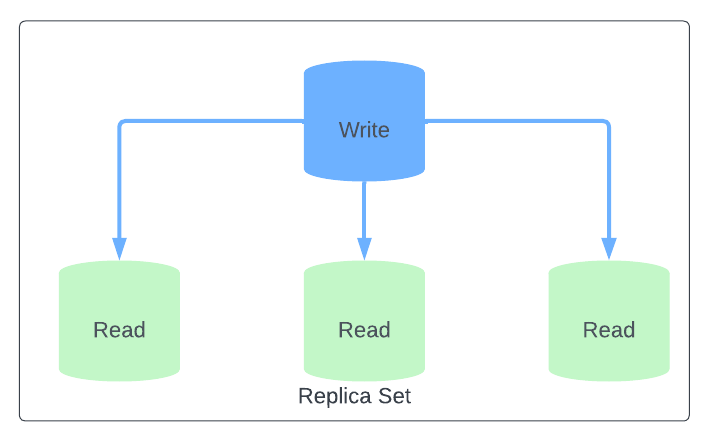

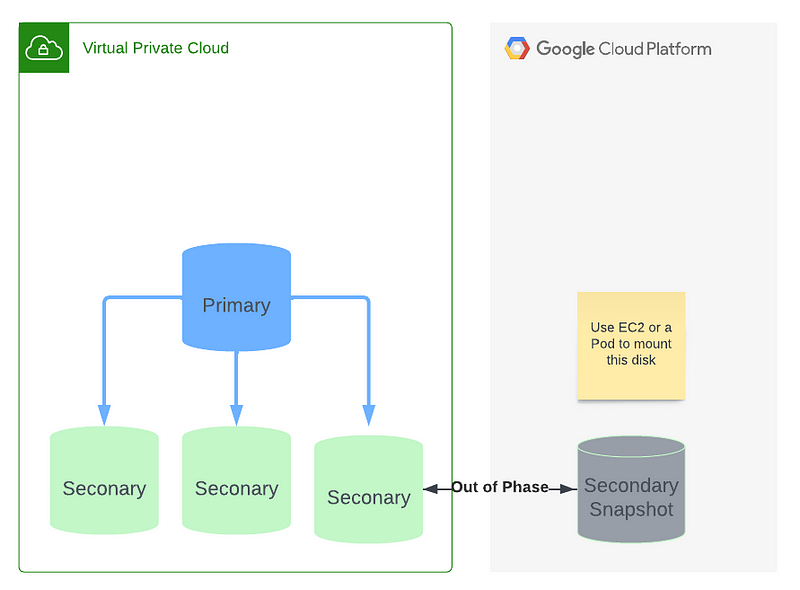

- Sync to Read Only Secondary: Create a new read-only secondary and sync it from the primary. This option relies on the stability of the VPN connection: a poor connection can cause the sync to fail and, depending on the tool, the sync then has to be redone from scratch, wasting precious days. The architecture of such a setup looks like this.

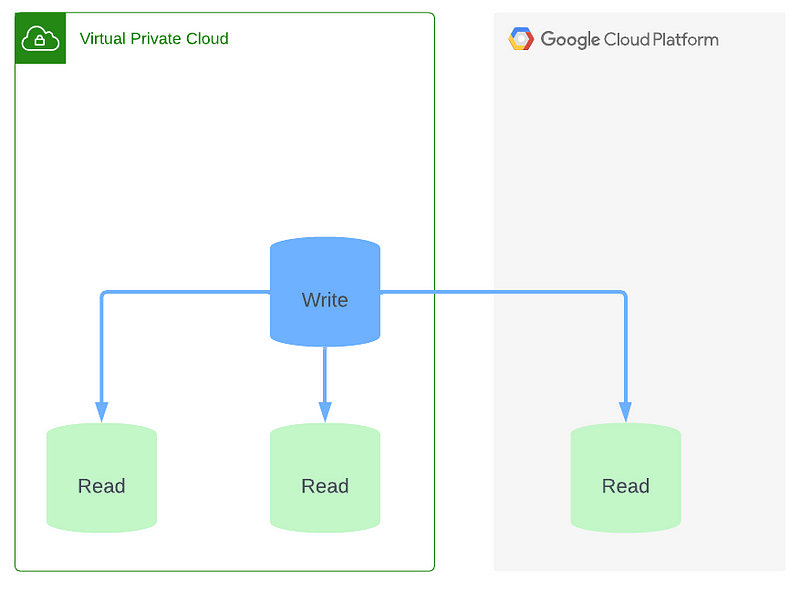
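To put the dump and restore option in numbers: taking the roughly three weeks for 5 TB quoted above as the baseline, and assuming the time really does scale linearly with size, a back-of-the-envelope estimate could look like this. The `estimateDumpRestoreDays` helper and its default values are illustrative only.

```javascript
// Hypothetical helper: linear extrapolation from one measured baseline.
// Defaults reflect the "about 3 weeks for 5 TB" figure mentioned above.
function estimateDumpRestoreDays(sizeTb, baselineTb = 5, baselineDays = 21) {
  if (sizeTb < 0) {
    throw new RangeError("size must be non-negative");
  }
  return (sizeTb / baselineTb) * baselineDays;
}

for (const sizeTb of [1, 5, 10]) {
  const days = estimateDumpRestoreDays(sizeTb);
  console.log(`${sizeTb} TB -> roughly ${days.toFixed(1)} days`);
}
```

The estimate ignores the writes that pile up while the dump is running, which is exactly the delta that makes this option hard to use for a no-downtime migration.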

The way we solved this was by merging these two solutions. Think of a 5 TB database sharded by region: disable sharding so that the database lives in a single shard on a single replica set, then snapshot the entire disk and migrate it using a tool like Velostrata (now called Migrate for Compute Engine). Once the disk has been migrated over to the other cloud provider, mount it to a Compute Engine VM or a pod in Kubernetes, depending on your infrastructure.

Take the mounted volume and make it join the replica set over the VPN as a read-only secondary. It will be about 2–3 days out of sync, the time the disk spends being migrated, and it should sync within 24 hours. This solves the problem of having to constantly catch up with perpetual dump and restores.
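Joining from a copied disk only works if the primary's oplog still holds every write made since the snapshot. Otherwise the new member is too stale to catch up and needs a full resync. The sketch below checks that, assuming an object shaped like the output of `db.getReplicationInfo()` in mongosh, whose `timeDiffHours` field is the time span the oplog currently covers. The `oplogCoversTransit` helper, the safety factor, and the sample numbers are assumptions for illustration.

```javascript
// Hypothetical helper: compares the oplog window against the time the
// disk spends in transit, with some headroom for the catch-up itself.
function oplogCoversTransit(replInfo, transitDays, safetyFactor = 1.5) {
  const requiredHours = transitDays * 24 * safetyFactor;
  return {
    requiredHours,
    availableHours: replInfo.timeDiffHours,
    ok: replInfo.timeDiffHours >= requiredHours,
  };
}

// Sample value; in mongosh this would come from db.getReplicationInfo().
const sampleReplInfo = { timeDiffHours: 120 };
console.log(oplogCoversTransit(sampleReplInfo, 3));
```

If the check fails, the oplog on the primary has to be enlarged before the snapshot is taken, not after.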

The application cannot be swapped over to the new cloud provider until the data sync is complete, so having this problem solved first is a priority before moving onto the application.

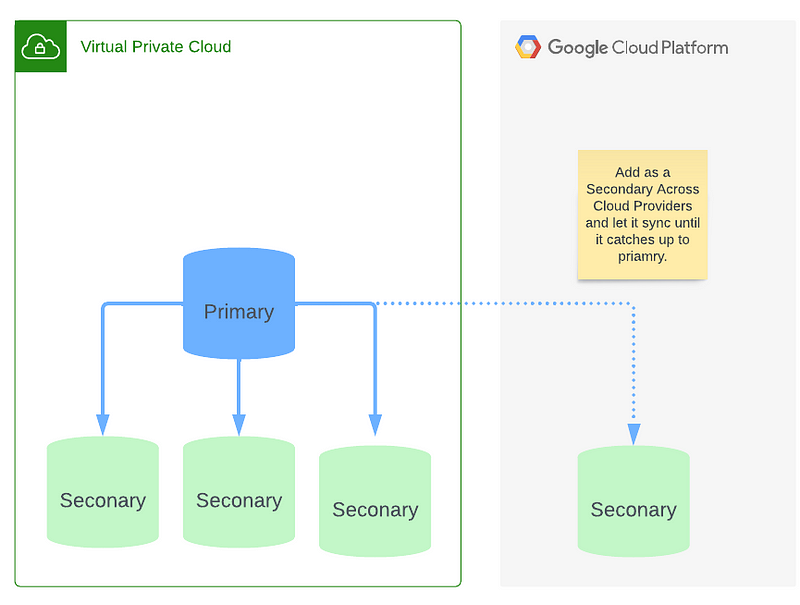
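Whether the sync is complete can be judged from the replication lag of the new members. A rough sketch, assuming an object shaped like the output of `rs.status()`, where each member carries `name`, `stateStr`, and `optimeDate`. The `replicationLagSeconds` helper and the sample data are made up for illustration.

```javascript
// Hypothetical helper: lag of every secondary behind the primary, in
// seconds, computed from the last applied operation time of each member.
function replicationLagSeconds(status) {
  const primary = status.members.find((m) => m.stateStr === "PRIMARY");
  if (!primary) {
    throw new Error("no primary in replica set status");
  }
  const lag = {};
  for (const member of status.members) {
    if (member.stateStr !== "SECONDARY") continue;
    lag[member.name] =
      (primary.optimeDate.getTime() - member.optimeDate.getTime()) / 1000;
  }
  return lag;
}

// Sample data; in mongosh this would come from rs.status().
const sampleStatus = {
  members: [
    { name: "aws1.example.com:27017", stateStr: "PRIMARY", optimeDate: new Date("2024-01-01T12:00:00Z") },
    { name: "aws2.example.com:27017", stateStr: "SECONDARY", optimeDate: new Date("2024-01-01T11:59:58Z") },
    { name: "gcp1.example.com:27017", stateStr: "SECONDARY", optimeDate: new Date("2024-01-01T09:00:00Z") },
  ],
};
console.log(replicationLagSeconds(sampleStatus));
```

In this sample the GCP member is still three hours behind, so the application should not be switched over yet.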

The process of data migration is illustrated as follows.

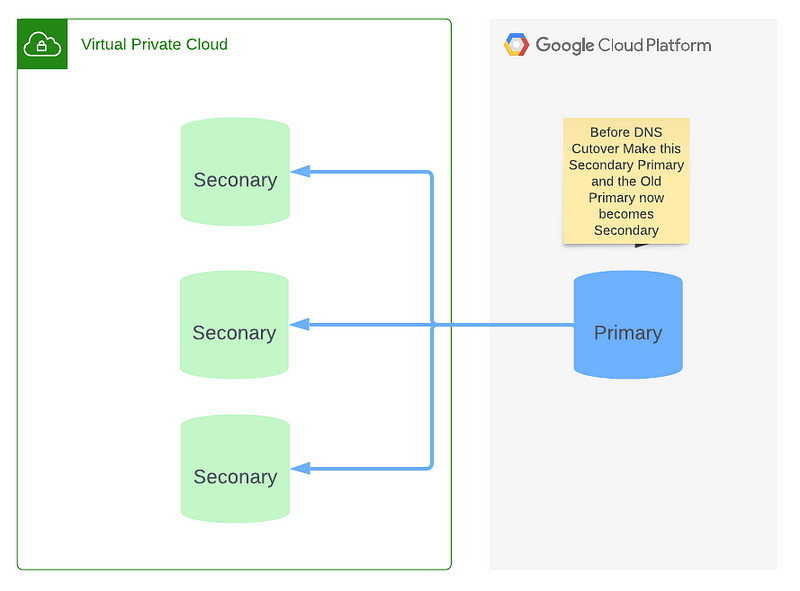

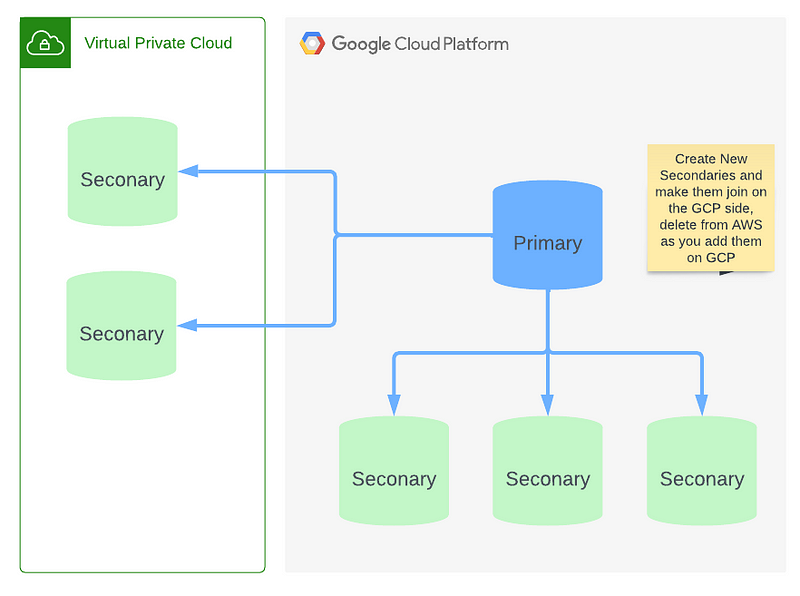

Once the replicas are synced you can swap the primary between the clouds. This is important because the primary must be in whichever cloud provider is serving the live traffic, to avoid latency.

During the migration, all writes from the application running in GCP need to be sent through the VPN to the primary in AWS. This setup increases latency on the GCP side, so be aware of that when looking at metrics.

When you are ready to do the DNS switch, you will need to update the priority of the replica set member you want to make primary.

For example, take a replica set with the following configuration:

    {
      "_id": "rs",
      "version": 7,
      "members": [
        { "_id": 1000, "host": "aws1.example.com:27017" },
        { "_id": 1001, "host": "aws2.example.com:27017" },
        { "_id": 1002, "host": "aws3.example.com:27017" },
        { "_id": 1003, "host": "gcp1.example.com:27017" },
        { "_id": 1004, "host": "gcp2.example.com:27017" },
        { "_id": 1005, "host": "gcp3.example.com:27017" }
      ]
    }

From a mongosh session you can update the priority of the replica set members to move the primary between different hostnames:

    // Fetch the current replica set configuration
    cfg = rs.conf()
    cfg.members[0].priority = 50
    cfg.members[1].priority = 50
    cfg.members[2].priority = 50
    cfg.members[3].priority = 100 // This would be gcp1.example.com in GCP
    cfg.members[4].priority = 50
    cfg.members[5].priority = 50
    // Apply the new configuration; elections now favor the highest priority
    rs.reconfig(cfg)

You can run that script and change the priorities to make the primary switch between AWS and GCP.
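Indexing into `cfg.members` by position is easy to get wrong once members are added or removed. As an alternative sketch, the priorities can be assigned by host name instead. `withPreferredPrimary` is a hypothetical helper that works on a plain config object; in mongosh you would pass it the result of `rs.conf()` and hand the returned object to `rs.reconfig()`.

```javascript
// Hypothetical helper: returns a copy of the config in which the chosen
// host gets the high priority and every other member the normal one.
function withPreferredPrimary(cfg, preferredHost, high = 100, normal = 50) {
  if (!cfg.members.some((m) => m.host === preferredHost)) {
    throw new Error(`host not in replica set: ${preferredHost}`);
  }
  return {
    ...cfg,
    members: cfg.members.map((m) => ({
      ...m,
      priority: m.host === preferredHost ? high : normal,
    })),
  };
}

// Shortened sample config; in mongosh this would be rs.conf().
const sampleCfg = {
  _id: "rs",
  version: 7,
  members: [
    { _id: 1000, host: "aws1.example.com:27017" },
    { _id: 1003, host: "gcp1.example.com:27017" },
    { _id: 1004, host: "gcp2.example.com:27017" },
  ],
};
const newCfg = withPreferredPrimary(sampleCfg, "gcp1.example.com:27017");
console.log(newCfg.members.map((m) => `${m.host} -> ${m.priority}`));
```

Moving the primary back to AWS is then a matter of calling the helper again with an AWS host name.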

One small caveat: for this to work you will need DNS forwarding between the two cloud providers, since the host field of each member in rs.conf() should be a hostname that every member can resolve rather than an IP address.
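A quick pre-flight check along those lines: the sketch below flags any member whose `host` is an IP literal rather than a DNS name. The `findIpHosts` helper and its simple pattern matching are assumptions made for illustration, not a MongoDB feature.

```javascript
// Hypothetical helper: lists the members whose host field looks like an
// IPv4 address or a bracketed IPv6 literal instead of a hostname.
function findIpHosts(cfg) {
  const ipv4 = /^\d{1,3}(\.\d{1,3}){3}$/;
  return cfg.members
    .map((m) => m.host)
    .filter((host) => {
      if (host.startsWith("[")) return true; // bracketed IPv6 literal
      const name = host.replace(/:\d+$/, ""); // strip the port
      return ipv4.test(name);
    });
}

// Sample config with one offending member.
const mixedCfg = {
  members: [
    { _id: 1000, host: "aws1.example.com:27017" },
    { _id: 1003, host: "10.0.0.5:27017" },
  ],
};
console.log(findIpHosts(mixedCfg));
```

An empty result means every member is addressed by name, which is what the DNS forwarding between the clouds is there to support.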

Conclusion

This is the last post in the Migrating From AWS to GCP series. The challenges faced here will be the same across frameworks and programming languages. The series has focused only on infrastructure components; a closer look at pricing and costs is also relevant and should be taken into consideration. I hope this series was informative and gives you enough to form an informed opinion if your company is thinking about moving to a different cloud provider.