Introduction

Two of the most common questions I get when consulting are: (a) how do we break our monolithic application into smaller microservices, and (b) how do we migrate a platform, or at least some of its sub-components, from one cloud provider to another? One of the most interesting projects I’ve been involved in was migrating a monolithic application from AWS to a GCP/GKE environment. In this series I cover some of the challenges we faced and how we overcame them.

This is a three-part series in which we break the migration up into three general domains and take a deep dive into each.

Part 1 — the Network Domain

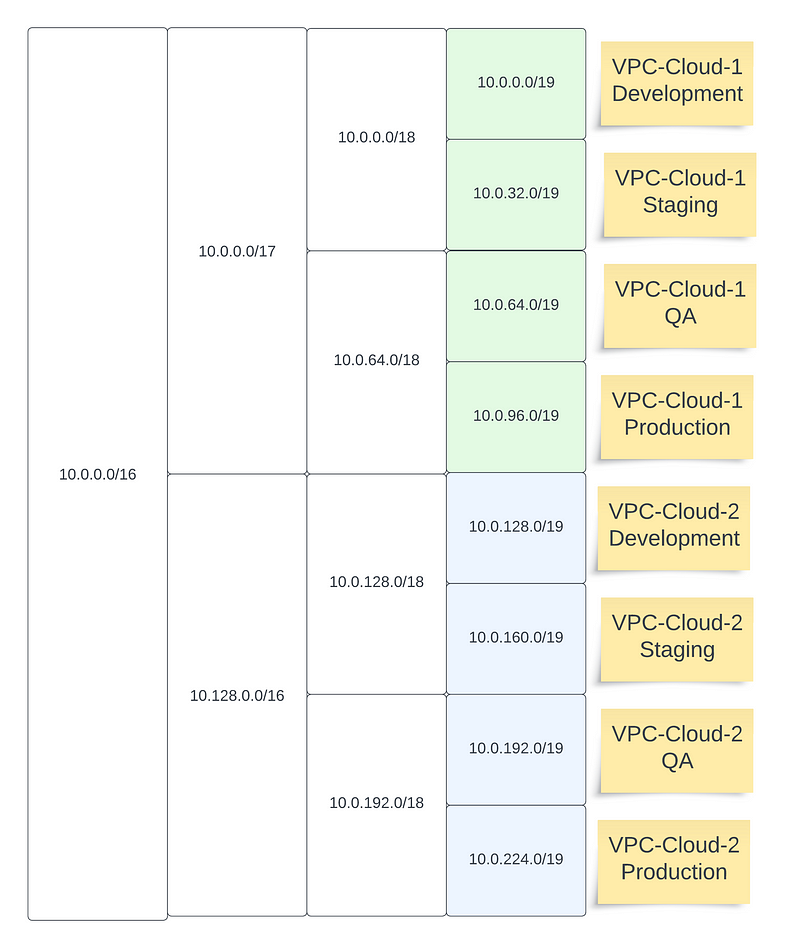

The problem of connecting application and infrastructure components between two cloud providers can be visualized as follows.

The VPCs on either cloud provider need to be able to establish connections between their IP ranges. This is done via a VPN gateway on both sides. You can find instructions for setting this up online.

Let us look at a few considerations when designing a solution like this.

Challenges:

- IP range collision: Each of the VPCs mentioned has at least one CIDR range associated with it. For route table entries to propagate correctly across the tunnel sessions, these ranges must not overlap with each other.

- Figure out whether all your infrastructure components can talk to each other via raw IP during the migration process. If some of them require DNS resolution for internal traffic, you will likely need to configure a DNS forwarder.

- If you find yourself in the unfortunate situation of also having to set up the actual site-to-site (S2S) VPN connectivity, it is worth familiarizing yourself with how it works, including how it handles packet routing and DNS resolution. At a very high level, S2S VPNs are either statically or dynamically routed. The former, as the name suggests, relies on hand-configured route table entries. The latter almost always uses the Border Gateway Protocol (BGP), which in simple terms means the two VPN gateways (assuming they support it) continuously exchange information with each other. This is a combination of protocol-level messages, such as keep-alives, and routing table entries, so no static configuration is required. If a new route becomes available, it is advertised to the peer network and propagated into the route tables on either side.
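The IP range collision check from the first bullet can be sketched in a few lines of Python using only the standard library. This is a minimal illustration, not a full network audit: the range names and CIDR values below are hypothetical, and in practice you would feed in every range in play on both sides (VPC, subnet, pod, and service ranges).

```python
from ipaddress import ip_network
from itertools import combinations


def find_overlaps(cidrs_by_name):
    """Return every pair of named CIDR ranges that overlap."""
    networks = {name: ip_network(cidr) for name, cidr in cidrs_by_name.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical ranges, for illustration only.
ranges = {
    "aws-vpc": "10.0.0.0/16",
    "gcp-nodes": "10.1.0.0/20",
    "gcp-pods": "10.0.128.0/17",
}

print(find_overlaps(ranges))  # [('aws-vpc', 'gcp-pods')]
```

Running a check like this before creating the tunnels is far cheaper than re-addressing a VPC after the fact.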

Kubernetes DNS Via VPN

If your applications have historically relied on Kubernetes’ internal DNS resolution within the cluster (such as <SERVICE_NAME>.<NAMESPACE> or <SERVICE_NAME>.<NAMESPACE>.svc.cluster.local), you’ll soon find that these names are unresolvable between AWS and GCP. The names are only served by the cluster’s own DNS, and the Service IPs behind them are only routable on cluster nodes, because kube-proxy only updates iptables on nodes that are part of the node groups that make up the cluster. Anything outside the cluster, such as EC2 machines or Compute Engine instances, does not have its routing tables or iptables rules updated by kube-proxy. To get around this, you have to expose a load balancer per service and forward Cloud DNS or Route 53 queries to a DNS server on the other cloud.

A useful tool to solve this is external-dns, which will allow you to annotate your Kubernetes services as shown below.

    kubectl run nginx --image=nginx --port=80
    kubectl expose pod nginx --port=80 --target-port=80 --type=LoadBalancer
    kubectl annotate service nginx "external-dns.alpha.kubernetes.io/hostname=nginx.example.org."

The way this works is that any Service configured with type: ClusterIP has to be changed to type: LoadBalancer, which provisions a routable IP that is reachable from outside the Kubernetes cluster. Services annotated for external-dns then trigger the automatic creation of an A record pointing at this routable IP, which can be used from both cloud providers.
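If you would rather keep this in version control than run imperative commands, the same Service can be described declaratively. The sketch below builds an equivalent manifest in Python and prints it as JSON, which kubectl accepts alongside YAML. The helper name is hypothetical, and the `run: nginx` selector assumes the label that `kubectl run` puts on the pod; adjust it to match your own pod labels.

```python
import json


def load_balancer_service(name, hostname, port=80, target_port=80):
    """Build a LoadBalancer Service manifest annotated for external-dns."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # Annotation key taken from the kubectl annotate command above.
                "external-dns.alpha.kubernetes.io/hostname": hostname,
            },
        },
        "spec": {
            "type": "LoadBalancer",
            # Assumption: the pod carries the run=<name> label set by `kubectl run`.
            "selector": {"run": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


manifest = load_balancer_service("nginx", "nginx.example.org.")
print(json.dumps(manifest, indent=2))
```

The printed JSON can be piped into `kubectl apply -f -`.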

Quotas and costs are a point of contention here: if your application has many microservices that each need to be fronted by a load balancer, the costs will scale linearly with the number of services.

DNS Server outside of Kubernetes

During our network migration we faced the problem of forwarding DNS resolution queries from AWS into GCP and vice versa. Luckily, Kubernetes provides a CoreDNS instance for its internal DNS resolution, which we can configure with forwarding rules to DNS servers outside of the GKE cluster.

    # kubectl --namespace kube-system edit configmap coredns
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health {
                lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa { # <--- Default resolver for Kubernetes, do not modify
                pods insecure
                fallthrough in-addr.arpa ip6.arpa
                ttl 30
            }
            prometheus :9153
            forward . /etc/resolv.conf
            cache 30
            loop
            reload
            loadbalance
        }
        # Server blocks must sit at the top level of the Corefile, not inside .:53
        example.internal.ops:53 { # DNS zone to forward; queries must match this suffix
            errors
            cache 20
            forward . 10.1.10.10 # <---- IP of your DNS server
        }

One Ingress vs Multiple

A typical question when deploying Kubernetes clusters is how to handle incoming traffic into the cluster. There are broadly two ways to go about it.

- One Ingress resource that handles all hosts and routing.

    # ingress.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: single-ingress-catchall
    spec:
      rules:
      - host: "app.example.com" # HOST 1
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: frontend
                port:
                  number: 8080
      - host: "api.example.com" # HOST 2
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: api
                port:
                  number: 8080
    ...

- One Ingress resource per service.

    # ingress-app.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: app-ingress
    spec:
      rules:
      - host: "app.example.com"
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: frontend
                port:
                  number: 8080

    # ingress-api.yaml
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: api-ingress
    spec:
      rules:
      - host: "api.example.com"
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: api
                port:
                  number: 8080

The decision here depends on whether you want developers to own the routing or not. In our case, an infrastructure team handled routing to all services, so we went with the option of creating a separate Helm chart that held the Ingress resource. Most teams, however, will want each service’s Helm chart to contain its own Ingress and handle routing themselves.
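To make the trade-off concrete, both layouts can be generated from the same host-to-service routing table. The Python sketch below is illustrative only: the routing table and helper names are hypothetical, and it prints just the resulting resource names rather than full manifests.

```python
# Hypothetical routing table: host -> (Service name, Service port).
ROUTES = {
    "app.example.com": ("frontend", 8080),
    "api.example.com": ("api", 8080),
}


def rule(host, service, port):
    """One Ingress rule sending every path on `host` to `service`."""
    return {
        "host": host,
        "http": {"paths": [{
            "pathType": "Prefix",
            "path": "/",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }]},
    }


def ingress(name, rules):
    """Wrap a list of rules in a networking.k8s.io/v1 Ingress manifest."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": rules},
    }


def single_ingress(routes):
    """Option 1: one catch-all Ingress, typically owned by an infrastructure team."""
    rules = [rule(host, svc, port) for host, (svc, port) in routes.items()]
    return ingress("single-ingress-catchall", rules)


def per_service_ingresses(routes):
    """Option 2: one Ingress per host, which can live in each service's chart."""
    return [
        ingress(f"{host.split('.')[0]}-ingress", [rule(host, svc, port)])
        for host, (svc, port) in routes.items()
    ]


print(single_ingress(ROUTES)["metadata"]["name"])
print([m["metadata"]["name"] for m in per_service_ingresses(ROUTES)])
```

With the single Ingress, every routing change goes through one resource and one owner. With per-service Ingresses, each team changes only its own manifest.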

DNS Cutover

When we are eventually ready for the transition, we switch traffic from AWS to GCP through a DNS cutover.

The cutover itself is done at the DNS provider, such as GoDaddy or Route 53, depending on where your domain is hosted, and involves updating the A record and CNAMEs of the root domain. This is a delicate procedure, as the domain may also carry MX and TXT records. It is prudent to have the application running hot on both cloud providers so that you can switch over and fall back to the old environment should something go terribly wrong. A DNS rollback can take between 1 and 10 minutes, depending on DNS propagation.
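During the cutover (or a rollback) it helps to have a small check that tells you which side a hostname currently resolves to. The following is a minimal sketch under stated assumptions: the function names, hostname, and IP addresses are hypothetical, and the resolver is injectable so the logic can be exercised without touching real DNS. By default it uses the local resolver, so the answer reflects whatever your machine's DNS cache sees.

```python
import socket


def resolve_ipv4(hostname):
    """Resolve a hostname to its set of IPv4 addresses via the system resolver."""
    infos = socket.getaddrinfo(
        hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
    )
    return {info[4][0] for info in infos}


def cutover_state(hostname, old_ips, new_ips, resolve=resolve_ipv4):
    """Report whether a hostname resolves to the old side, the new side, or neither cleanly."""
    answers = set(resolve(hostname))
    if answers and answers <= set(new_ips):
        return "new"
    if answers and answers <= set(old_ips):
        return "old"
    return "mixed-or-unknown"


# Hypothetical addresses from documentation ranges, for illustration only.
OLD_AWS_IPS = {"203.0.113.10"}
NEW_GCP_IPS = {"198.51.100.20"}

# A stand-in resolver so the example runs without network access.
fake_resolver = lambda hostname: {"198.51.100.20"}
print(cutover_state("app.example.com", OLD_AWS_IPS, NEW_GCP_IPS, resolve=fake_resolver))  # new
```

Dropping the `resolve` argument makes the check use real DNS, which is handy to poll in a loop while the change propagates.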

Lastly…

You can find Part 2 here, where we talk about application infrastructure components and the challenges that we faced when migrating them over and how we solved them.