Background

Another new day and another new requirement descending upon me from the heavens, this time on the Ingress front! The main requirement is to migrate from NGINX Ingress to Kong Ingress. Kong integrates better with the underlying microservice architecture and has a more extensible plugin architecture for some authentication features that are part of the platform.

Why DBLess?

Kong has a myriad of deployment options, which you can view on their site, as well as different configuration options. The traditional one uses a PostgreSQL database that stores every change applied to the Kong Gateway and that every Kong Gateway instance connects to. I decided against this option for three reasons: it adds a dependency, maintaining a database is cumbersome, and if the database goes down, so does the ingress. The objective for this new iteration of the ingress was to make it as reliable as possible.

The second option is Konnect. Here Kong splits the gateway deployment into control plane and data plane components. Kong runs the control plane, and the cluster administrator is responsible for the data plane components. Again, I decided against this, because critical infrastructure components need to be handled by our own system administrators, not to mention the security implications. So … not an option.

The final one is DB-less mode, which is the one I ended up choosing. As the name suggests, this deployment involves no PostgreSQL database. Kong keeps its configuration in memory, which allows runtime configuration changes to the ingress without restarts and keeps the configuration consistent across multiple replicas.
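To give a sense of what that in-memory configuration looks like, here is a hedged sketch of Kong's declarative format. The service name, URL, route, and origin are hypothetical. In the setup described in this post, nobody writes this file by hand: the Kong Ingress Controller builds it from Kubernetes resources and pushes it to each gateway replica.

```yaml
# Hypothetical declarative config (kong.yml), for illustration only
_format_version: "3.0"
services:
  - name: example-service            # hypothetical upstream service
    url: http://example-service.default.svc.cluster.local:80
    routes:
      - name: example-route
        paths:
          - /example                 # requests under /example go to example-service
    plugins:
      - name: cors                   # plugins can be attached per service
        config:
          origins:
            - "https://example.com"
```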

Requirements and Limitations

The environment I was tasked to work in is AWS with EKS, using ACM as the certificate manager. This is challenging because ACM-generated certificates can't be stored as Kubernetes Secrets and then loaded and bound to the ingress resource, so a clever architectural workaround had to be put in place.

At the time of writing, I had a choice between Ingress and Gateway API objects in Kubernetes. Since I wanted to future-proof this, I went with Gateway and HTTPRoute resources for configuring routing within the cluster.

Another limitation of this solution is that Kong only works here with Network Load Balancers, which operate at Layer 4 (TCP), not Layer 3.

At the time there were no security requirements for encryption within the cluster; that will be handled by a service mesh in a later project. So I could simply terminate SSL at the load balancer and forward plain HTTP to Kong Gateway's HTTP port.
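To make the termination point concrete: the NLB listener on port 443 holds the ACM certificate, decrypts the traffic, and forwards it to Kong's plain-HTTP port 8000. Below is a hedged sketch of a LoadBalancer Service that behaves this way. The Service name, the pod label, and the ARN placeholder are hypothetical. In practice the Kong Helm chart renders this Service for you from the values shown later.

```yaml
# Hypothetical sketch: in practice the Kong Helm chart renders this Service
apiVersion: v1
kind: Service
metadata:
  name: kong-gateway-proxy                  # hypothetical name
  annotations:
    # The NLB listener on 443 presents the ACM certificate and decrypts traffic
    service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "<ARN_ACM_CERTIFICATE>"
    service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443"
    # Traffic from the NLB to the pods is plain TCP, no re-encryption
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "tcp"
spec:
  type: LoadBalancer
  selector:
    app: kong-gateway                       # hypothetical pod label
  ports:
    - name: kong-proxy
      port: 80
      targetPort: 8000                      # Kong's plain-HTTP proxy listener
      protocol: TCP
    - name: kong-proxy-tls
      port: 443
      targetPort: 8000                      # TLS already terminated, so also plain HTTP
      protocol: TCP
```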

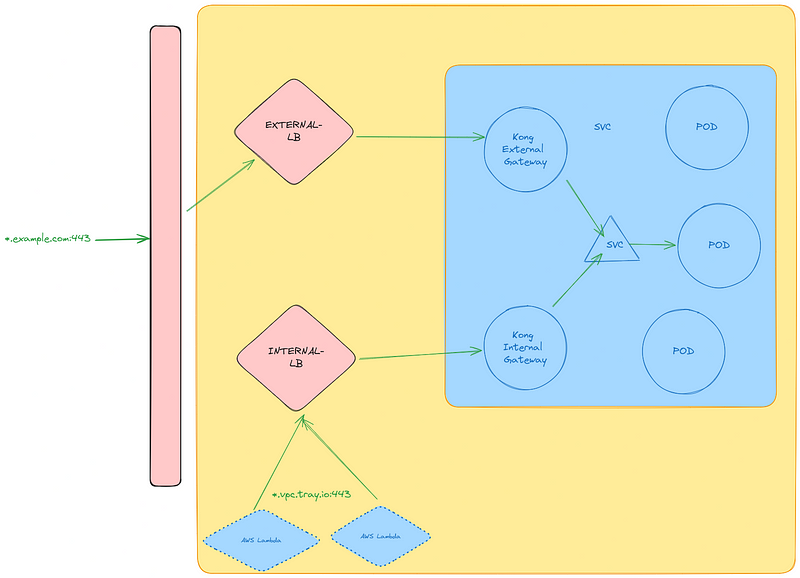

Finally, the application receives two kinds of traffic: external traffic from the internet, and internal traffic from AWS Lambda components within the same network. The internal traffic never leaves the internal network, so it had to be fronted by a separate ingress resource.

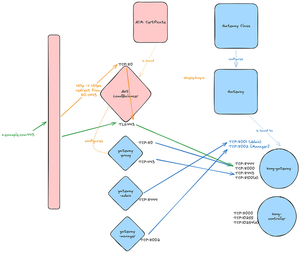

Architecture

A visual representation of the Kong architecture is as follows.

And this is a higher-level overview of how the two Kong gateways are deployed relative to each other.

Solution

ALB Ingress Controller

This component (the AWS Load Balancer Controller, formerly known as the ALB Ingress Controller) lets you configure AWS load balancers and listeners through annotations on Kubernetes Service objects. Configure the controller according to your environment.

```yaml
# values.yaml
image:
  tag: v2.7.0
clusterName: <CLUSTER_NAME>
vpcId: <VPC_ID>
region: <REGION_ID>
ingressClass: alb
createIngressClassResource: true
rbac:
  create: true
podDisruptionBudget:
  maxUnavailable: 1
serviceAccount:
  name: alb-ingress-controller
  annotations:
    eks.amazonaws.com/role-arn: <IAM_ROLE>
```

Run the Helm commands to install it:

```shell
$ helm repo add eks https://aws.github.io/eks-charts
$ helm install aws-load-balancer-controller eks/aws-load-balancer-controller \
    -n kube-system --values values.yaml
```

If you want to do it through Terraform:

```hcl
resource "helm_release" "alb_ingress_controller" {
  name             = "alb-ingress-controller"
  namespace        = "kube-system"
  chart            = "aws-load-balancer-controller"
  repository       = "https://aws.github.io/eks-charts"
  create_namespace = false
  version          = "1.7.1"

  # Reuse the same values file as the Helm CLI install
  values = [
    file("${path.module}/values.yaml")
  ]
}
```

Deploy Kong

For Kong, we need to deploy two instances: one that configures the external load balancer, and one for internal traffic.

For this we'll create three files. A global.yaml holds the values shared by both the internal and external deployments. An internal.yaml and an external.yaml hold the values specific to each deployment.

I'll be using the chart called `ingress` from https://charts.konghq.com.

```yaml
# global.yaml
controller: # Set up Controller Resources
  resources:
    limits:
      memory: 2G
    requests:
      cpu: 1
      memory: 2G
  env:
    controller_log_level: "info" # Logging Configuration
    proxy_access_log: "off"
  autoscaling:
    enabled: true
    minReplicas: 1
    maxReplicas: 5
    metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 80

gateway: # Configure Kong Gateway, i.e. the network traffic proxy
  resources:
    limits:
      memory: 2G
    requests:
      cpu: 1
      memory: 2G
  plugins: # Configure the dd-trace plugin for Datadog
    configMaps:
      - pluginName: ddtrace
        name: kong-ddtrace
  autoscaling:
    enabled: true
    minReplicas: 2
    maxReplicas: 10
    metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 80
      - type: Resource
        resource:
          name: memory
          target:
            type: Utilization
            averageUtilization: 80
  manager: # Configure Kong Manager
    annotations:
      konghq.com/protocol: http # Set traffic to HTTP only
    enabled: true
    http:
      containerPort: 8002
      enabled: true
      servicePort: 8002
    tls:
      enabled: true # Not used, but must stay enabled: disabling it triggers a Helm chart bug that misconfigures the deployment
  customEnv: # Datadog configuration for tracing, remove it if you don't need it
    dd_trace_agent_url: "http://$(DATADOG_AGENT_HOST):8126"
    datadog_agent_host: # Datadog configuration for tracing, remove it if you don't need it
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
  env:
    proxy_access_log: "off"
    log_level: info
    admin_gui_path: "/"
    datadog_agent_host:
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
  admin:
    type: ClusterIP
    enabled: true
    http:
      enabled: true
    tls:
      enabled: true
  # Disable all Kong products we aren't going to use
  enterprise:
    enabled: false
    portal:
      enabled: false
    rbac:
      enabled: false
    smtp:
      enabled: false
    vitals:
      enabled: false
  # dblessConfig:
  #   config: |
  #     _format_version: "3.0"
  #     _transform: true
  proxy:
    enabled: true
    annotations:
      service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
      service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: "preserve_client_ip.enabled=true"
      service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "tcp"
    tls:
      enabled: true
      overrideServiceTargetPort: 8000 # Send TLS-terminated traffic to Kong's plain-HTTP port
```

The important bits are in the `gateway.proxy.annotations` spec. These annotations are attached to the LoadBalancer Service object that directly configures the AWS Network Load Balancer, and they are shared by both the internal and external load balancers.

```yaml
# internal.yaml
controller:
  ingressController:
    enabled: true
    ingressClass: kong-internal
    env:
      GATEWAY_API_CONTROLLER_NAME: example.com/internal-gateway-controller
      INGRESS_CLASS: internal-ingress-controllers.example.com/kong-internal

gateway:
  env:
    admin_gui_api_url: "https://kong-internal-manager.staging.example.com/admin"
    proxy_listen: "0.0.0.0:8000 so_keepalive=on, [::]:8000 so_keepalive=on, 0.0.0.0:8443 http2 ssl, [::]:8443 http2 ssl"
  extraLabels:
    tags.datadoghq.com/env: staging
    tags.datadoghq.com/service: kong
    tags.datadoghq.com/version: "3.6.1"
  manager:
    ingress:
      ingressClassName: internal-nginx
      enabled: false
      hostname: kong-internal-manager.staging.example.com
      path: /
      pathType: Prefix
    type: ClusterIP
  proxy:
    annotations:
      service.beta.kubernetes.io/aws-load-balancer-subnets: "<ARRAY_SUBNET_IDS>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "<ARN_ACM_CERTIFICATE>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443" # Annotation values must be strings
      service.beta.kubernetes.io/load-balancer-source-ranges: 10.88.0.0/16,10.89.0.0/16,10.90.0.0/16
      service.beta.kubernetes.io/aws-load-balancer-scheme: "internal"
      service.beta.kubernetes.io/aws-load-balancer-type: nlb
      service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
      service.beta.kubernetes.io/aws-load-balancer-name: "kong-internal"
      service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
      service.beta.kubernetes.io/aws-load-balancer-internal: "true"
```

```yaml
# external.yaml
controller:
  ingressController:
    enabled: true
    ingressClass: kong-external
    ingressClassAnnotations: {}
    env:
      GATEWAY_API_CONTROLLER_NAME: example.com/external-gateway-controller
      INGRESS_CLASS: external-ingress-controllers.example.com/kong-external

gateway:
  env:
    admin_gui_api_url: "https://kong-external-manager.example.com/admin" # Only needed if you want to enable the Kong Admin API
    proxy_listen: "0.0.0.0:8000 so_keepalive=on, [::]:8000 so_keepalive=on, 0.0.0.0:8443 http2 ssl, [::]:8443 http2 ssl" # so_keepalive=on is crucial to avoid timeout issues
  manager:
    ingress:
      ingressClassName: external-nginx
      enabled: false
      hostname: kong-external-manager.example.com
      path: /
      pathType: Prefix
    type: ClusterIP
  proxy:
    annotations:
      service.beta.kubernetes.io/aws-load-balancer-subnets: "<ARRAY_SUBNET_IDS>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "<ARN_ACM_CERTIFICATE>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443"
      service.beta.kubernetes.io/aws-load-balancer-type: external
      service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip # Set to `ip` to register pod IPs as targets instead of node instances
      service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing" # For external, internet-facing traffic
      service.beta.kubernetes.io/aws-load-balancer-name: "kong-external"
```

Again, the important bits are in `gateway.proxy.annotations`, which configure the AWS load balancer.
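Both releases are installed with `-f global.yaml` followed by the environment-specific file, and Helm deep-merges the values maps in that order. On conflicting keys the later file wins, and lists are replaced rather than merged. As a sketch, the external release ends up with roughly the following proxy annotations. The values are the same placeholders used above.

```yaml
# Effective gateway.proxy.annotations for the kong-external release
# (global.yaml merged with external.yaml; placeholders kept as-is)
gateway:
  proxy:
    annotations:
      # from global.yaml
      service.beta.kubernetes.io/aws-load-balancer-cross-zone-load-balancing-enabled: "true"
      service.beta.kubernetes.io/aws-load-balancer-target-group-attributes: "preserve_client_ip.enabled=true"
      service.beta.kubernetes.io/aws-load-balancer-backend-protocol: "tcp"
      # from external.yaml
      service.beta.kubernetes.io/aws-load-balancer-subnets: "<ARRAY_SUBNET_IDS>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "<ARN_ACM_CERTIFICATE>"
      service.beta.kubernetes.io/aws-load-balancer-ssl-ports: "443"
      service.beta.kubernetes.io/aws-load-balancer-type: external
      service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
      service.beta.kubernetes.io/aws-load-balancer-scheme: "internet-facing"
      service.beta.kubernetes.io/aws-load-balancer-name: "kong-external"
```

You can check the rendered result before installing with `helm template kong-external kong/ingress -f global.yaml -f external.yaml`.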

Finally, the commands to deploy it all together:

```shell
helm repo add kong https://charts.konghq.com
helm repo update

helm install kong-internal kong/ingress \
  -f global.yaml \
  -f internal.yaml

helm install kong-external kong/ingress \
  -f global.yaml \
  -f external.yaml
```

This brings up two Kong instances and two separate AWS load balancers.

Doing it through Terraform is a bit more complicated, but if you want to know how, hit me up and I can help you set it up.
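With both controllers running, routing is configured through Gateway API resources. Below is a hedged sketch for the internal instance. It makes three assumptions:

- The `konghq.com/gatewayclass-unmanaged` annotation tells the Kong Ingress Controller to attach the Gateway to the Kong deployment it already manages, rather than expecting one to be provisioned.
- The `controllerName` matches the `GATEWAY_API_CONTROLLER_NAME` set in internal.yaml.
- The namespaces, hostname, and backend Service names are hypothetical.

The listener is plain HTTP on port 80 because TLS is already terminated at the NLB.

```yaml
# Sketch only: names marked hypothetical are placeholders
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: kong-internal
  annotations:
    konghq.com/gatewayclass-unmanaged: "true"   # reuse the Kong deployment from the Helm release
spec:
  controllerName: example.com/internal-gateway-controller   # must match GATEWAY_API_CONTROLLER_NAME
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: kong-internal
  namespace: kong                     # hypothetical namespace
spec:
  gatewayClassName: kong-internal
  listeners:
    - name: http
      port: 80
      protocol: HTTP                  # TLS is terminated at the NLB
      allowedRoutes:
        namespaces:
          from: All                   # accept HTTPRoutes from any namespace
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: example-route                 # hypothetical
  namespace: example-app              # hypothetical application namespace
spec:
  parentRefs:
    - name: kong-internal
      namespace: kong
  hostnames:
    - "demo.internal.example.com"     # hypothetical internal hostname
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: example-service       # hypothetical backend Service
          port: 80
```

The external instance follows the same pattern, with its own GatewayClass pointing at `example.com/external-gateway-controller`.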

Configure Plugins

Configuring plugins is quite easy. A typical example is the CORS plugin.

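```yaml
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: cors
  namespace: kong
plugin: cors
config:
  origins:
    - "https://example.com"
  methods:
    - OPTIONS
    - GET
    - POST
    - PUT
    - DELETE
    - PATCH
  headers:
    - Authorization
    - Content-Type
  exposed_headers:
    - Authorization
    - Content-Type
  credentials: true
  max_age: 1728000
```

This is a simple example; you would of course use your own origins for your application. Note that a KongPlugin is namespaced. By default it only applies to resources in its own namespace, so create it in the namespace of the routes that reference it, or use a KongClusterPlugin for cluster-wide plugins.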
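Other plugins follow the same pattern. As a hedged sketch, the `rate-limit` entry mentioned in the route annotation comment could be a KongPlugin with that (hypothetical) name, backed by Kong's bundled `rate-limiting` plugin. The limit of 60 requests per minute is illustrative. In DB-less mode the `cluster` counter policy is not available because it needs a database, so the sketch uses `local`.

```yaml
# Hypothetical KongPlugin; name, namespace and limit are placeholders
apiVersion: configuration.konghq.com/v1
kind: KongPlugin
metadata:
  name: rate-limit        # the name referenced from konghq.com/plugins
  namespace: kong         # same namespace as the routes that reference it
plugin: rate-limiting     # Kong's bundled rate-limiting plugin
config:
  minute: 60              # illustrative limit per client per minute
  policy: local           # "cluster" needs a database, so not usable in DB-less mode
```

Because `local` keeps counters in each gateway replica, the effective limit grows with the number of replicas the autoscaler runs.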

To enable the plugin on a route, look for your favourite HTTPRoute object and reference the plugin.

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  annotations:
    konghq.com/plugins: cors # comma-separated list of plugins if you want more,
                             # e.g. "cors,retry,rate-limit"
```

ACM

The ACM part is a bit troublesome. Kong cannot bind an AWS ACM certificate, so we must use the AWS Load Balancer Controller to bind the ACM certificate to the Kubernetes Service object via the chart's value overrides.

```yaml
gateway:
  proxy:
    annotations:
      service.beta.kubernetes.io/aws-load-balancer-ssl-cert: "${ACM_CERTIFICATE_ARN}"
```

This is necessary because, as of this writing, ACM certificates cannot be mounted as Secrets and bound via Gateway resources, as in the following counter-example.

```yaml
# Counter-example: this cannot be done with ACM
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: example-gateway
spec:
  gatewayClassName: kong
  listeners:
    - name: https
      port: 443
      protocol: HTTPS
      hostname: "demo.example.com"
      tls:
        mode: Terminate
        certificateRefs:
          - kind: Secret
            name: "<ACM_CERTIFICATE_ARN>" # Cannot be done with AWS ACM,
                                          # though it works with cert-manager
```

Benefits

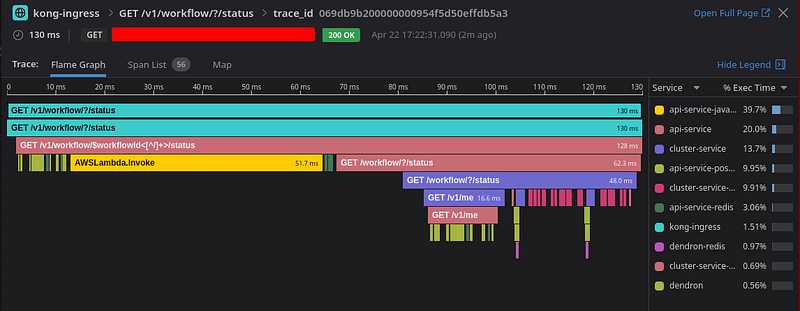

DataDog End to End Tracing

One of the reasons we moved to Kong is its Datadog plugin integration, which gives us end-to-end tracing. This is the feature our team coveted most. It shows, with pinpoint accuracy, where a network or application error is happening and which logs belong to that particular trace. That reduces the time spent debugging and increases developer productivity.

Extensible Plugin Architecture

Loading plugins is easy, and we can build our own. One of the architectural mistakes made over the life of the company was ignoring standard approaches: instead of using an API gateway and adhering to standard authentication methods, previous generations of engineers created their own "api-service" and authentication methods. With Kong, we can write our own plugins and let them take over some of those routing and authentication responsibilities. That lets us slowly move away from the custom "api-service" implementation and eventually deprecate it.

One such effort is a forward-auth plugin. It sends the bearer token to another service and retrieves a secondary token that can be forwarded to upstream services. That work is happening here, if you are interested: https://github.com/danielftapiar/forward-auth-plugin.

Final Thoughts

This migration took about three months, with zero downtime and no production issues. We did the swap using DNS weighted routing, one service at a time. That was rather slow, but safer than switching everything at once and causing production failures. Kong is definitely an improvement over our previous NGINX setup: it is far more extensible and makes it easy to interface with third-party software. Where it can't, we can use its Plugin Development Kit to build the integration ourselves. So far it's been a resounding success, and our ingress architecture no longer keeps us awake at night.
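For readers unfamiliar with weighted routing: Route 53 answers queries for a name across its weighted records in proportion to each record's weight divided by the sum of the weights. Below is a hedged CloudFormation sketch of one step in such a cutover. The hosted zone, record name, and load balancer DNS names and zone IDs are placeholders, and the 90/10 split is illustrative.

```yaml
# Hypothetical CloudFormation sketch: all names, zones and DNS names are placeholders
Resources:
  ApiViaNginx:
    Type: AWS::Route53::RecordSet
    Properties:
      HostedZoneName: example.com.
      Name: api.example.com.
      Type: A
      SetIdentifier: nginx-ingress          # unique per record sharing this name and type
      Weight: 90                             # about 90% of answers point at NGINX
      AliasTarget:
        DNSName: <NGINX_LB_DNS_NAME>
        HostedZoneId: <NGINX_LB_HOSTED_ZONE_ID>
  ApiViaKong:
    Type: AWS::Route53::RecordSet
    Properties:
      HostedZoneName: example.com.
      Name: api.example.com.
      Type: A
      SetIdentifier: kong-external
      Weight: 10                             # about 10% of answers point at Kong
      AliasTarget:
        DNSName: <KONG_NLB_DNS_NAME>
        HostedZoneId: <KONG_NLB_HOSTED_ZONE_ID>
```

Shifting traffic is then a matter of adjusting the two weights step by step until the Kong record carries all of it.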