You may have worked with EKS in the past, and if so, one of the first infuriating aspects of setting up your cluster for other users is the dreaded aws-auth ConfigMap. This arcane piece of crap is by far the worst architectural decision I have ever had the displeasure of experiencing, and AWS has finally decided to do something about it by introducing Access Entries!

You can read more in the official docs, including the guide on migrating from the aws-auth ConfigMap. I'm just going to talk about my experience with this new feature and the errors I encountered.

Right off the bat, I wanted to do this through Terraform, which meant updating my provider: you need at least version 5.33.0 of the AWS provider.
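If you pin provider versions in code, a minimal sketch of that constraint could look like this. Only the `>= 5.33.0` floor comes from the requirement above. The rest is the standard `required_providers` layout.

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"
      # access_config and the access entry resources need at least 5.33.0
      version = ">= 5.33.0"
    }
  }
}
```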

To set the authentication mode, add the access_config block to your aws_eks_cluster resource (or module) and apply the change.

```hcl
resource "aws_eks_cluster" "cluster" {
  name = var.cluster_name

  # ...

  access_config {
    authentication_mode                         = "API_AND_CONFIG_MAP"
    bootstrap_cluster_creator_admin_permissions = false
  }
}
```

A few caveats here. There are three states you can put your cluster in: CONFIG_MAP -> API_AND_CONFIG_MAP -> API.

If you have an old cluster, it's most likely set up as CONFIG_MAP. If you've recently created one, it'll be provisioned as API_AND_CONFIG_MAP. On the first go, put the cluster in hybrid mode, API_AND_CONFIG_MAP.

DO NOT jump straight to API, as you could render your cluster useless: there is no rollback mechanism to go from API back to CONFIG_MAP.
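Since each transition is one-way, one option is to drive the mode from a variable, so that every stage shows up as its own reviewed change. Here is a minimal sketch, assuming a hypothetical variable named `eks_authentication_mode`. The validation only catches typos; it does not stop you from skipping straight to `API`.

```hcl
# Hypothetical variable: advance it one stage at a time,
# CONFIG_MAP -> API_AND_CONFIG_MAP -> API (each step is one-way).
variable "eks_authentication_mode" {
  type        = string
  default     = "API_AND_CONFIG_MAP"
  description = "Authentication mode for the EKS cluster"

  validation {
    condition     = contains(["CONFIG_MAP", "API_AND_CONFIG_MAP", "API"], var.eks_authentication_mode)
    error_message = "The authentication mode must be CONFIG_MAP, API_AND_CONFIG_MAP or API."
  }
}

# Then, inside aws_eks_cluster.cluster:
#   access_config {
#     authentication_mode = var.eks_authentication_mode
#   }
```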

As soon as I tried this, I started getting errors like this one when running terraform plan:

```
│ Error: clusterroles.rbac.authorization.k8s.io "view-nodes-and-namespaces" is forbidden: User "staging-admin" cannot get resource "clusterroles" in API group "rbac.authorization.k8s.io" at the cluster scope
│
│ with kubernetes_cluster_role.staging-eng-cluster,
│ on clusterrole-eng.tf line 1, in resource "kubernetes_cluster_role" "staging-eng-cluster":
│ 1: resource "kubernetes_cluster_role" "staging-eng-cluster" {
```

This one is specific to my RBAC setup. The way I got terraform plan to work was to manually update the EKS cluster through the AWS Console and then run terraform import. Again, if you don't hit this error, carry on!

Configuration Policy

Once the cluster has been updated to use the new configuration, we move on to the new access policies! Datadog has an excellent write-up on this if you want a deeper dive, but for this post I'm going to keep it as simple as possible.

You can list the access policies available to your cluster with this command:

```
$ aws eks list-access-policies
{
    "accessPolicies": [
        {
            "name": "AmazonEKSAdminPolicy",
            "arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSAdminPolicy"
        },
        {
            "name": "AmazonEKSClusterAdminPolicy",
            "arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"
        },
        {
            "name": "AmazonEKSEditPolicy",
            "arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSEditPolicy"
        },
        {
            "name": "AmazonEKSViewPolicy",
            "arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEKSViewPolicy"
        },
        {
            "name": "AmazonEMRJobPolicy",
            "arn": "arn:aws:eks::aws:cluster-access-policy/AmazonEMRJobPolicy"
        }
    ]
}
```

These policies map to the default cluster roles you can find in your own cluster:

```
$ kubectl get clusterrole -l kubernetes.io/bootstrapping=rbac-defaults
NAME            CREATED AT
admin           2024-01-03T22:19:06Z
cluster-admin   2024-01-03T22:19:05Z
edit            2024-01-03T22:19:06Z
view            2024-01-03T22:19:06Z
```

- AmazonEKSClusterAdminPolicy maps to the cluster-admin role.
- AmazonEKSAdminPolicy maps to the admin role.
- AmazonEKSEditPolicy maps to the edit role.
- AmazonEKSViewPolicy maps to the view role.
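These policies don't have to be granted cluster-wide. The following sketch associates AmazonEKSViewPolicy with a principal for specific namespaces only, using the `namespace` access scope. The resource name, principal ARN, and namespace names are hypothetical placeholders, and an access entry for that principal must already exist.

```hcl
resource "aws_eks_access_policy_association" "example_view_ns" {
  cluster_name = "<CLUSTER_NAME>"
  policy_arn   = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSViewPolicy"
  # Hypothetical: an IAM role that already has an access entry
  principal_arn = "<PRINCIPAL_ARN>"

  # Limit the view permissions to these namespaces instead of the whole cluster
  access_scope {
    type       = "namespace"
    namespaces = ["team-a", "team-b"] # hypothetical namespace names
  }
}
```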

Migration Strategy

So the strategy is quite simple: move the cluster through the three stages until it reaches the final state, API, and migrate the contents of the aws-auth ConfigMap into the new EKS access entries. For this exercise we are going to migrate two roles:

- KarpenterNodeRole-staging, the IAM role used by the nodes Karpenter provisions.
- staging-eng, the role I use to log into the cluster.

A sample ConfigMap looks like this:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    # …
    - rolearn: arn:aws:iam::xxxxxxxxx:role/aws-reserved/sso.amazonaws.com/AWSReservedSSO_staging-eng_xxxxxxxxxxx
      groups: ["staging-eng"]
      username: "staging-eng"
    - rolearn: arn:aws:iam::xxxxxxxxx:role/KarpenterNodeRole-staging
      groups: ["system:bootstrappers", "system:nodes"]
      username: "system:node:{{EC2PrivateDNSName}}"
```

For the staging SSO role entry, the translation requires these two resources:

```hcl
resource "aws_eks_access_entry" "staging_eng_entry" {
  cluster_name      = "<CLUSTER_NAME>"
  principal_arn     = "arn:aws:iam::xxxxxxxxx:role/aws-reserved/sso.amazonaws.com/AWSReservedSSO_staging-eng_xxxxxxxxxxx"
  kubernetes_groups = ["staging-eng"]
  user_name         = "staging-eng"
  type              = "STANDARD"
}

resource "aws_eks_access_policy_association" "staging_eng_entry" {
  # Referencing the access entry makes Terraform create it before the association
  cluster_name  = aws_eks_access_entry.staging_eng_entry.cluster_name
  principal_arn = aws_eks_access_entry.staging_eng_entry.principal_arn
  policy_arn    = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSEditPolicy"

  access_scope {
    type = "cluster"
  }
}
```

The principal_arn is the IAM role that will be assumed to access the cluster. The kubernetes_groups are the group names that the underlying RBAC system matches against the subjects of your ClusterRoleBindings. If you have k9s, you can view the default groups.
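To make the group link concrete, here is a sketch of a ClusterRoleBinding, written with the Terraform Kubernetes provider, that targets the staging-eng group set on the access entry. The binding name is hypothetical. The bound ClusterRole is assumed to be something like the view-nodes-and-namespaces role from the earlier error; substitute whichever role your RBAC setup uses.

```hcl
# Grants the ClusterRole to every principal whose access entry
# lists kubernetes_groups = ["staging-eng"].
resource "kubernetes_cluster_role_binding" "staging_eng" {
  metadata {
    name = "staging-eng-view-nodes-and-namespaces" # hypothetical name
  }

  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "ClusterRole"
    name      = "view-nodes-and-namespaces" # assumed to exist already
  }

  # Must match the group name on the access entry exactly
  subject {
    kind      = "Group"
    name      = "staging-eng"
    api_group = "rbac.authorization.k8s.io"
  }
}
```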

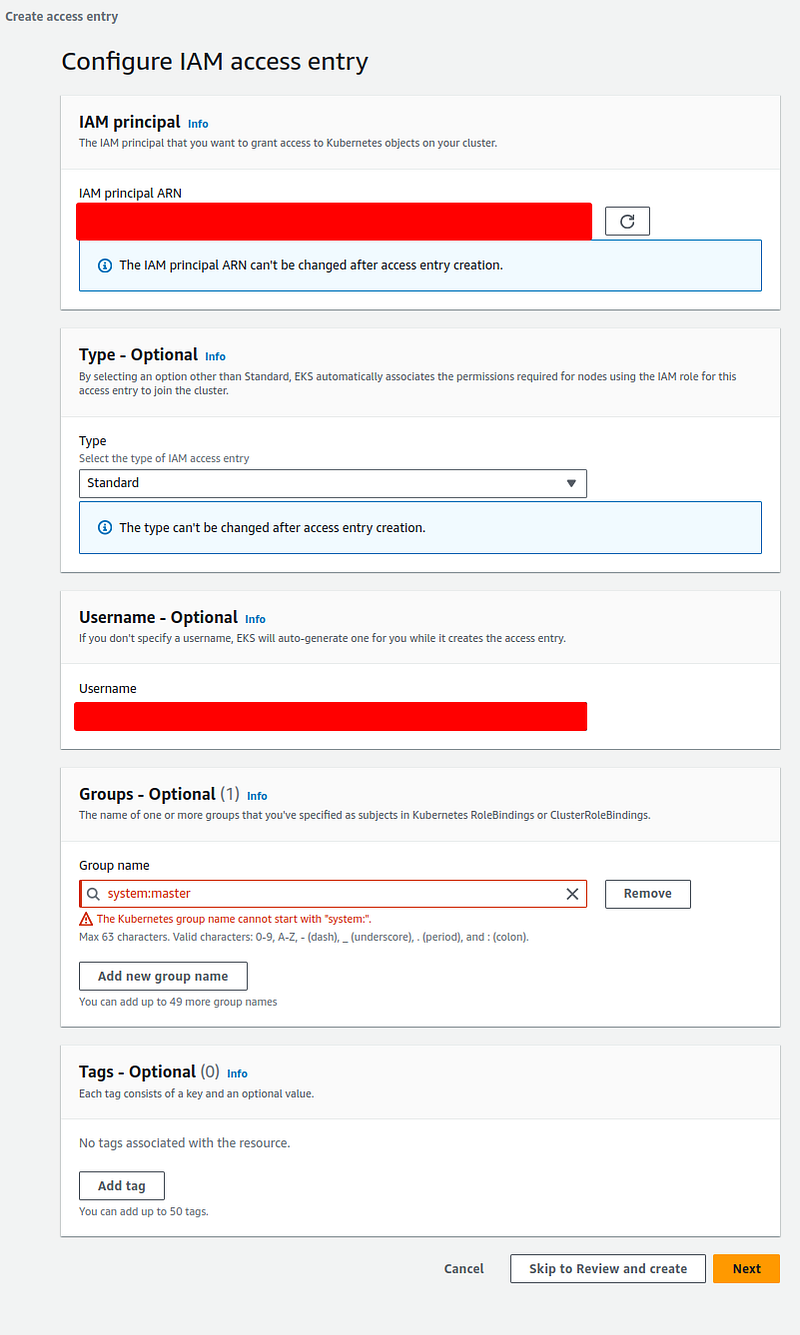

Migrating System Root Permissions

Migrating normal roles and users is pretty straightforward, but root permissions are a bit trickier and don't map 1:1 like before. First, you can't migrate the system:masters group to an access entry. If you try, you'll get this error:

```
│ Error: creating EKS Access Entry (staging:arn:aws:iam::xxxxxxxxxxxx:role/AWSReservedSSO_xxxxxxxxxxxxxxxxxxxxx): operation error EKS: CreateAccessEntry, https response error StatusCode: 400, RequestID: 19b3d598-f96c-4053-bf95-1f2d77e9dee3, InvalidParameterException: The kubernetes group name system:masters is invalid, it cannot start with system:
```

Even if you try to do it from the AWS Console, you'll get the same error.

You'd get the same error when trying to migrate system:bootstrappers and system:nodes, as in our Karpenter role:

```yaml
- rolearn: arn:aws:iam::xxxxxxxxx:role/KarpenterNodeRole-staging
  groups: ["system:bootstrappers", "system:nodes"]
  username: "system:node:{{EC2PrivateDNSName}}"
```

The answer to this is quite simple: use type = "EC2_LINUX" with your principal_arn, like this:

```hcl
resource "aws_eks_access_entry" "karpenter_entry" {
  cluster_name  = "<CLUSTER_NAME>"
  principal_arn = "arn:aws:iam::xxxxxxxxx:role/KarpenterNodeRole-staging"
  type          = "EC2_LINUX"
}
```

No username, no groups, much cleaner, and that's it! EKS takes care of the node identity and the system:nodes group for this entry type.
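For the system:masters case, the usual substitute is to drop the group entirely and associate the AWS-managed AmazonEKSClusterAdminPolicy, which maps to cluster-admin, at cluster scope. Here is a sketch; the resource names and the admin role ARN are hypothetical placeholders.

```hcl
# Replacement for a mapRoles entry with groups: ["system:masters"].
# The role ARN below is a hypothetical placeholder.
resource "aws_eks_access_entry" "cluster_admin" {
  cluster_name  = "<CLUSTER_NAME>"
  principal_arn = "arn:aws:iam::xxxxxxxxx:role/<ADMIN_ROLE>"
  type          = "STANDARD"
  # No kubernetes_groups: names starting with "system:" are rejected
}

resource "aws_eks_access_policy_association" "cluster_admin" {
  cluster_name  = aws_eks_access_entry.cluster_admin.cluster_name
  principal_arn = aws_eks_access_entry.cluster_admin.principal_arn
  policy_arn    = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

  access_scope {
    type = "cluster"
  }
}
```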

Considerations

When performing the migration, duplicate whatever role or user you use to authenticate to your cluster with kubectl. Create two IAM roles, two access entries, and two entries in the aws-auth ConfigMap, so that one acts as a backup if the other fails to authenticate.

This matters because you might modify the entry you are currently using to run kubectl commands and misconfigure something, whether through a typo or a random error. If that happens, you will get Unauthorized responses when trying to connect to your cluster. A safe approach is to run the change like a blue-green deployment with two roles.
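Here is a sketch of the two-role approach in Terraform, assuming two hypothetical IAM roles, eks-admin-blue and eks-admin-green. Both get an identical access entry and policy association via `for_each`, so you can change one while still authenticating with the other. The matching aws-auth ConfigMap entries are not shown, and the policy choice is an assumption to adjust to your needs.

```hcl
locals {
  # Hypothetical role ARNs: change one at a time, keep the other as a fallback
  admin_role_arns = {
    blue  = "arn:aws:iam::xxxxxxxxx:role/eks-admin-blue"
    green = "arn:aws:iam::xxxxxxxxx:role/eks-admin-green"
  }
}

resource "aws_eks_access_entry" "admin" {
  for_each = local.admin_role_arns

  cluster_name  = "<CLUSTER_NAME>"
  principal_arn = each.value
  type          = "STANDARD"
}

# One association per access entry, keyed "blue" and "green"
resource "aws_eks_access_policy_association" "admin" {
  for_each = aws_eks_access_entry.admin

  cluster_name  = each.value.cluster_name
  principal_arn = each.value.principal_arn
  policy_arn    = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

  access_scope {
    type = "cluster"
  }
}
```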